India's First Sovereign AI Compute Core

Building indigenous RISC-V NPUs optimized for Indian enterprises and data centers. Designed in Bangalore, powering India's AI infrastructure.

10x Energy Efficiency

Our custom Matrix Extensions deliver 10x better energy efficiency than general-purpose GPUs for AI inference workloads.

Zero Licensing Fees with RISC-V

By using open-standard RISC-V architecture, we eliminate costly licensing fees while delivering cutting-edge AI performance.

Atmanirbhar Bharat: Self-Reliant India

Indigenously designed chips that break India's dependence on expensive imported GPUs. Made for India, by India.

Welcome to SemiconAI

Building India's first Sovereign AI Compute Core with indigenous RISC-V NPU technology.

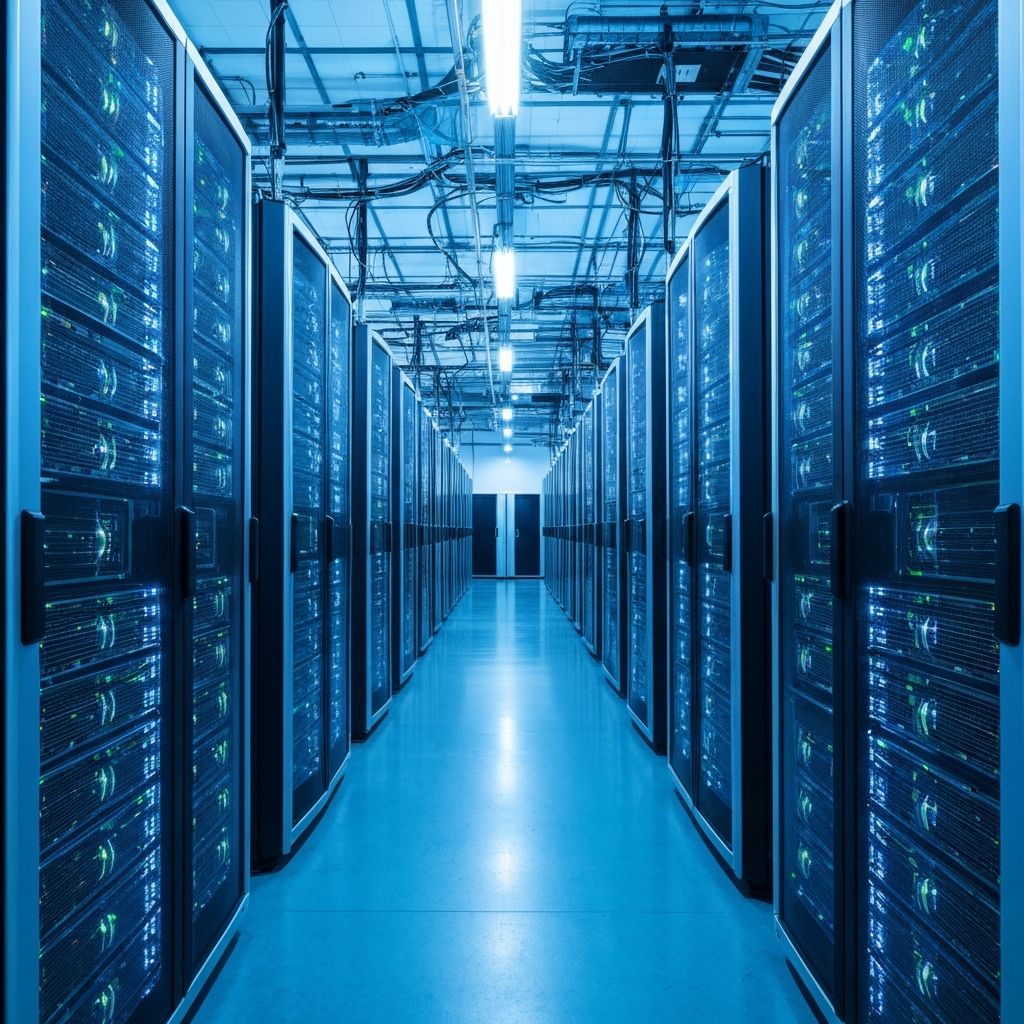

Building India's Sovereign AI Infrastructure

We are building India's first Sovereign AI Compute Core. While the world relies on expensive, power-hungry imported GPUs, SemiconAI is designing a RISC-V NPU, under a fabless model, optimized for the specific needs of Indian enterprises and data centers.

By building on the open-standard RISC-V instruction set, we avoid ISA licensing fees. By designing custom Matrix Extensions, we achieve 10x better energy efficiency than general-purpose GPUs on inference workloads. We design in Bangalore, fabricate with global partners, and deploy to power India's AI infrastructure.

RISC-V Architecture

Open-standard architecture eliminates licensing costs and enables full customization.

10x Energy Efficiency

Custom Matrix Extensions deliver superior performance per watt for AI inference workloads.

Designed in Bangalore

Indigenous design with global fabrication partners for world-class quality.

Fabless Model

Asset-light approach focusing on innovation while leveraging global manufacturing.

Why SemiconAI?

See how our approach compares to traditional solutions from Nvidia and Intel.

| Feature | Traditional Approach (Nvidia/Intel) | SemiconAI Approach (RISC-V NPU) |
|---|---|---|
| Architecture | Proprietary (Closed Source) | RISC-V (Open Standard) |
| Cost Model | High Licensing Fees + High Margins | Zero License Fees + Cost Efficient |
| Focus | General-Purpose (Good at Everything) | Domain-Specific (Optimized for AI Inference) |
| Sovereignty | Controlled by US Tech Giants | Indigenously Designed (Atmanirbhar) |
| Flexibility | Fixed ("Take it or leave it") | Customizable for Specific LLMs |

Our Products

Indigenous AI silicon designed for India's needs - from enterprise inference to sovereign AI training.

SemiconAI Infer-1

The Enterprise AI Inference Accelerator

A PCIe card that plugs into existing servers, purpose-built for running AI models such as Llama 3 in private data centers. Ideal for Indian enterprises that need AI capabilities without the Nvidia H100 price tag.

RISC-V Architecture

Custom Matrix Extensions for AI workloads

32GB LPDDR5

Cost-effective, fast memory for inference

Higher Tokens per Second per Dollar

Outperforms Nvidia A10 on cost efficiency

7nm/12nm Process

Cost-optimized manufacturing
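Cost efficiency here is quoted as tokens per second per dollar, and the energy-efficiency claim on this page is a performance-per-watt ratio. The minimal Python sketch below shows how such ratios can be computed, assuming both accelerators are measured on the same model with the same settings. The `AcceleratorProfile` type, the helper names and every number are hypothetical placeholders, not SemiconAI or Nvidia benchmark data.

```python
from dataclasses import dataclass


@dataclass
class AcceleratorProfile:
    """Illustrative inputs for one accelerator; all fields are hypothetical."""
    name: str
    tokens_per_second: float  # inference throughput measured on a fixed model
    price_usd: float          # hardware acquisition cost
    power_watts: float        # average board power during the measurement


def tokens_per_second_per_dollar(a: AcceleratorProfile) -> float:
    # Throughput normalized by purchase price: higher means more cost-efficient.
    return a.tokens_per_second / a.price_usd


def tokens_per_joule(a: AcceleratorProfile) -> float:
    # Energy efficiency: since 1 watt = 1 joule/second, tokens/s divided by W gives tokens/J.
    return a.tokens_per_second / a.power_watts


def relative_gain(candidate: float, baseline: float) -> float:
    # A result of 10.0 would mean "10x better" than the baseline on this metric.
    return candidate / baseline


# Placeholder numbers chosen only to exercise the functions, not real measurements.
card_a = AcceleratorProfile("card-a", tokens_per_second=1200.0, price_usd=4000.0, power_watts=60.0)
card_b = AcceleratorProfile("card-b", tokens_per_second=1500.0, price_usd=10000.0, power_watts=150.0)

print(relative_gain(tokens_per_second_per_dollar(card_a), tokens_per_second_per_dollar(card_b)))
print(relative_gain(tokens_per_joule(card_a), tokens_per_joule(card_b)))
```

With these placeholder inputs both ratios come out to 2.0; a "10x" claim corresponds to a `relative_gain` of 10 on whichever metric is being compared.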

Target Market

Indian Enterprise Data Centers: banks, e-commerce platforms, and government institutions that want to run LLMs privately but need a cost-effective alternative to expensive imported GPUs.
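A rough way to check whether a private LLM fits in Infer-1's 32GB of LPDDR5 is to multiply the parameter count by the bytes stored per parameter. The sketch below does this for the 8B and 70B sizes of Llama 3. It counts weights only; the 20% reserve for KV cache, activations and runtime is an assumed figure, and real headroom depends on context length and batch size. The helper names are hypothetical.

```python
# Bytes needed to store one weight at common inference precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, capacity_gb: float = 32.0,
         overhead_fraction: float = 0.2) -> bool:
    # Assumption: reserve 20% of capacity for KV cache, activations and runtime buffers.
    usable_gb = capacity_gb * (1.0 - overhead_fraction)
    return weight_footprint_gb(num_params, precision) <= usable_gb


for params, label in [(8e9, "8B"), (70e9, "70B")]:
    for precision in ("fp16", "int8", "int4"):
        size = round(weight_footprint_gb(params, precision), 1)
        print(label, precision, size, "GB", fits(params, precision))
```

Under these assumptions an 8B model fits at FP16, INT8 or INT4, while a 70B model does not fit on a single 32GB card even at INT4 (about 35 GB of weights) and would have to be split across cards.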

SemiconAI Train-X1

The "Stargate" Chip for India's AI Independence

A massive data center accelerator designed for training large foundation models. The engine that will power India's sovereign AI infrastructure.

Multi-Core RISC-V Cluster

Massively parallel architecture

128GB HBM3e

High Bandwidth Memory for training

Fabric-X Interconnect

Links 10,000+ chips together

3nm/5nm Process

Bleeding-edge fabrication
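Training needs far more memory per parameter than inference, which is one reason an interconnect linking many chips matters. A common rule of thumb for mixed-precision training with the Adam optimizer is 16 bytes per parameter: FP16 weights and gradients (2 + 2 bytes) plus FP32 master weights, momentum and variance (4 + 4 + 4 bytes). The sketch below turns that into a lower bound on how many 128GB chips are needed just to hold model and optimizer state, assuming the state is sharded evenly across chips. The 70% usable-HBM fraction, the model sizes and the function name are illustrative assumptions, and activation memory is ignored.

```python
import math

# Mixed-precision Adam: fp16 weights (2) + fp16 grads (2)
# + fp32 master weights, momentum and variance (4 + 4 + 4) = 16 bytes per parameter.
TRAINING_BYTES_PER_PARAM = 16
HBM_PER_CHIP_GB = 128  # Train-X1 listed HBM3e capacity


def min_chips_for_model_state(num_params: float, usable_fraction: float = 0.7) -> int:
    """Lower bound on chips needed just to hold sharded model and optimizer state.

    Assumes the state is split evenly across chips (fully sharded data parallelism)
    and that only `usable_fraction` of HBM is free after activations and buffers.
    """
    total_gb = num_params * TRAINING_BYTES_PER_PARAM / 1e9
    per_chip_gb = HBM_PER_CHIP_GB * usable_fraction
    return math.ceil(total_gb / per_chip_gb)


# Illustrative model sizes only.
for params in (8e9, 70e9, 400e9):
    print(f"{params / 1e9:.0f}B params -> at least {min_chips_for_model_state(params)} chips")
```

With these inputs the bounds are 2, 13 and 72 chips. These are memory floors only; compute throughput usually pushes real training clusters much larger.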

Target Market

Hyperscalers and National Supercomputers: E2E Networks, Yotta, Reliance Jio, and government initiatives building India's sovereign foundation models.

Product Roadmap

"We start by capturing the enterprise inference market with the cost-effective Infer-1 and building our software ecosystem around it. We then reinvest the revenue and traction from Infer-1 to build the Train-X1, the engine for India's AI independence."

SemiconAI Infer-1

Inference (Running Models)

SemiconAI Train-X1

Training (Building Models)

| Specification | SemiconAI Infer-1 | SemiconAI Train-X1 |
|---|---|---|
| Stage | Phase 1 (18-24 Months) | Phase 2 (3-5 Years) |
| Function | Inference (Running Models) | Training (Building Models) |
| Primary Customer | Enterprise & Private Cloud | Hyperscalers & National Supercomputers |
| Key Selling Point | Cost Efficiency (Low TCO) | Raw Performance & Sovereignty |
| Technology | 12nm / 7nm Process | 3nm Process + Chiplets |

Join the Sovereign AI Revolution

Be part of India's journey to AI self-reliance. Partner with us to build the future of indigenous AI infrastructure.